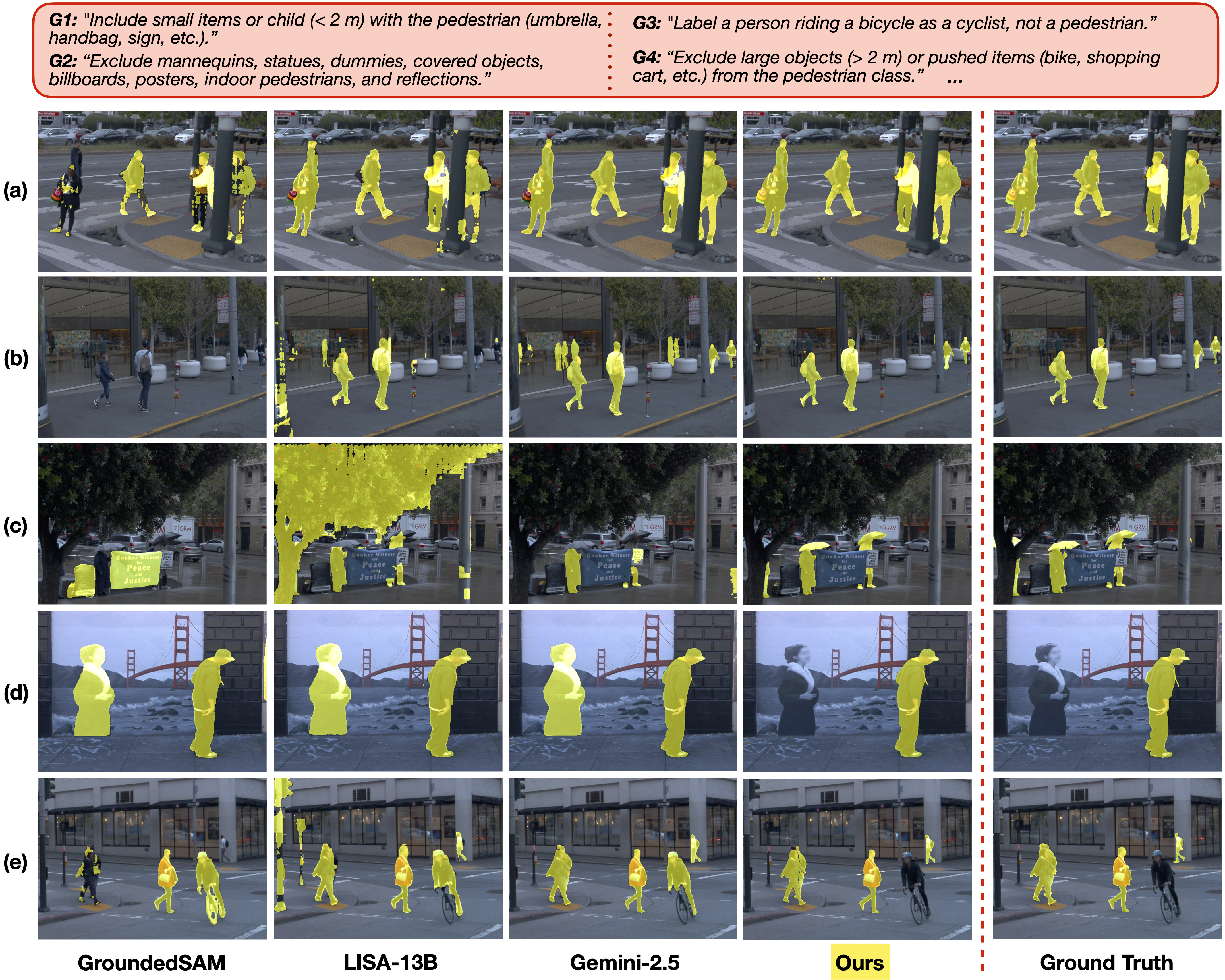

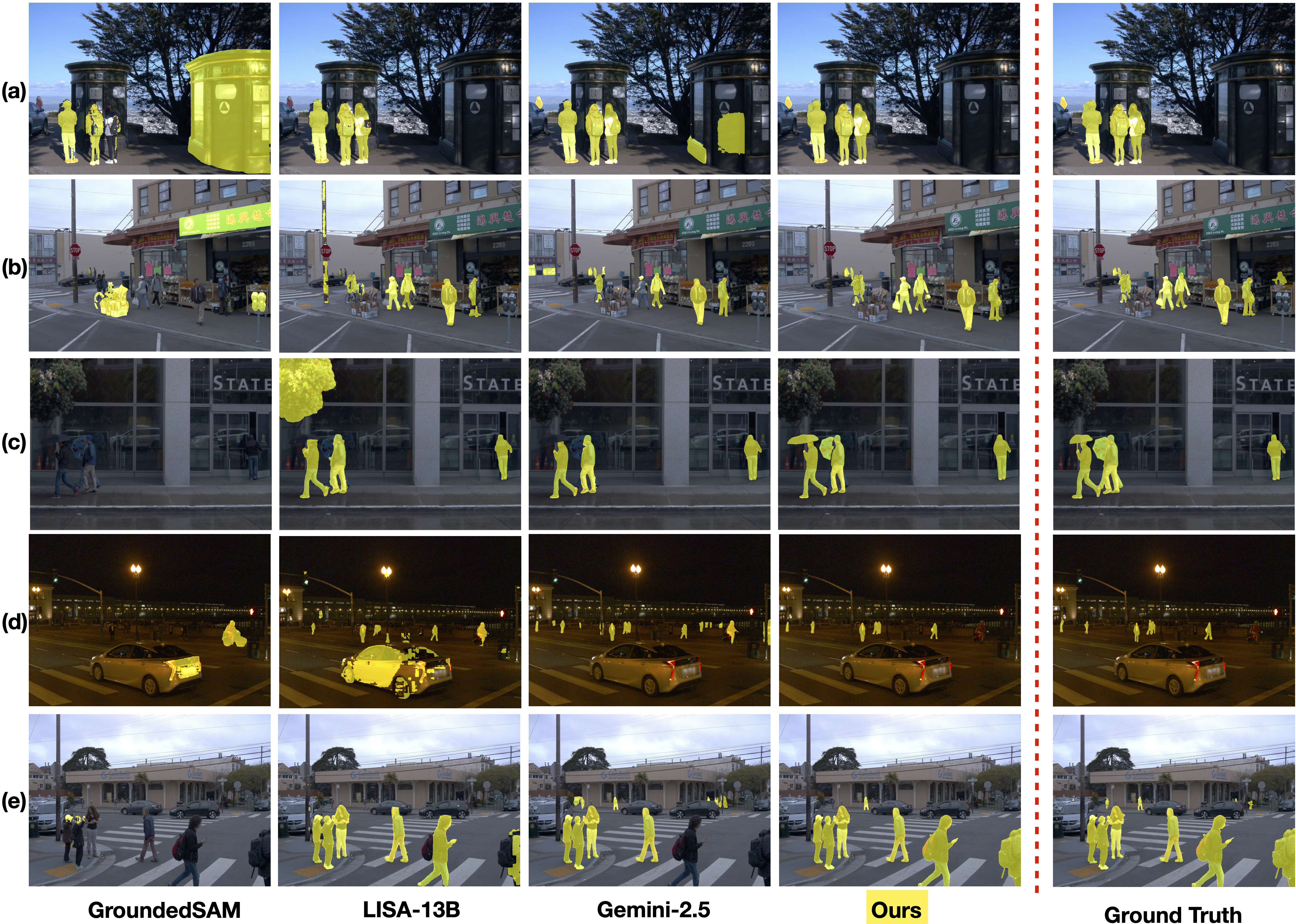

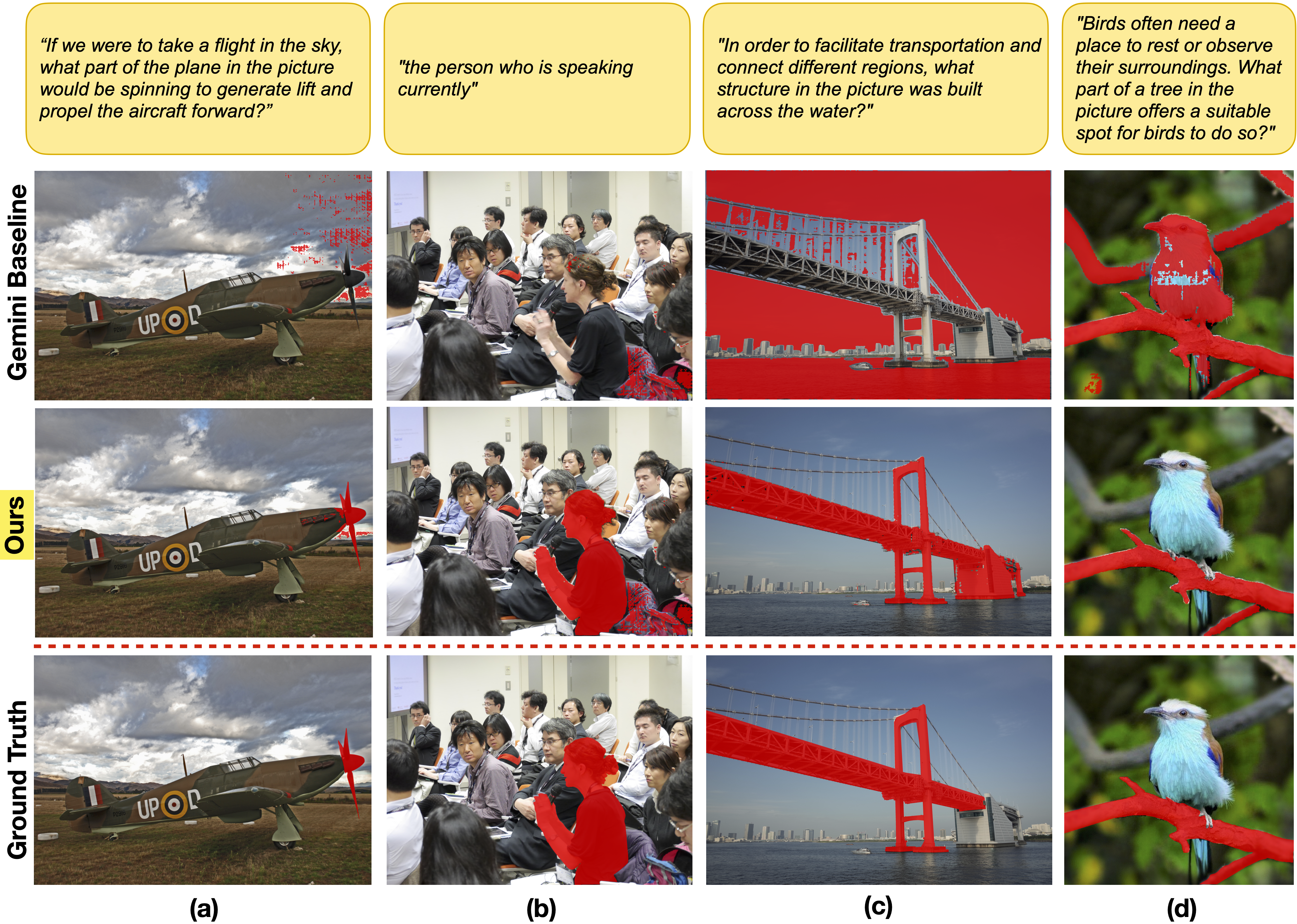

Semantic segmentation in real-world applications often requires not only accurate masks but also strict adherence to textual labeling guidelines. These guidelines are typically complex and long, and both human and automated labeling often fail to follow them faithfully. Traditional approaches depend on expensive task-specific retraining that must be repeated as the guidelines evolve. Although recent open-vocabulary segmentation methods excel with simple prompts, they often fail when confronted with sets of paragraph-length guidelines that specify intricate segmentation rules. To address this, we introduce a multi-agent, training-free framework that coordinates general-purpose vision-language models within an iterative Worker-Supervisor refinement architecture. The Worker performs the segmentation, the Supervisor critiques it against the retrieved guidelines, and a lightweight reinforcement learning stop policy decides when to terminate the loop, ensuring guideline-consistent masks while balancing resource use. Evaluated on the Waymo and ReasonSeg datasets, our method notably outperforms state-of-the-art baselines, demonstrating strong generalization and instruction adherence.

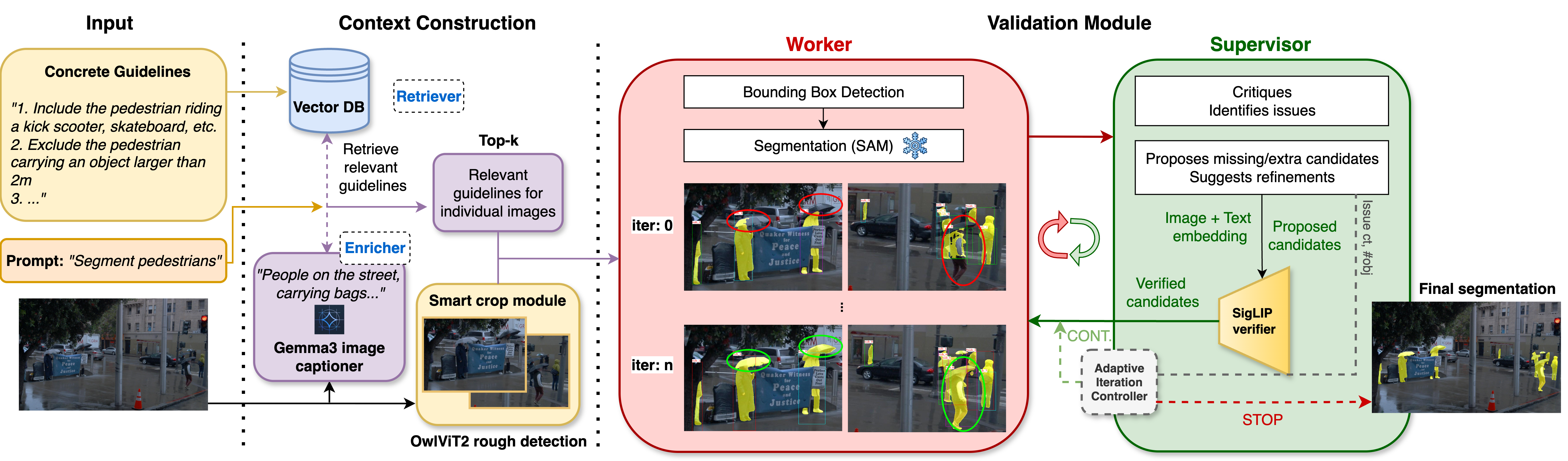

The input image and textual guidelines undergo context construction to extract scene-relevant rules. This focused context is processed through an iterative VLM loop where the Worker segments and the Supervisor critiques and suggests improvements. The number of iterations is controlled by an adaptive iteration controller. The final output is a segmentation that faithfully adheres to long and detailed guidelines.

Our results follow directly from the design. First, the context construction via image-specific guideline retrieval prevents instruction overload, so the VLM reasons over only the rules relevant to the scene, explaining robustness on long guidelines. Smart crop improves initial detections of small or distant instances. Second, the Worker-Supervisor decomposition allows iterative correction of missed items and false positives. Third, AiRC adaptively stops refinement to balance accuracy and resource usage, avoiding premature stops or unnecessary iterations. Finally, keeping VLM and SAM frozen avoids dataset-specific overfitting, working unchangeably for both Waymo and ReasonSeg datasets.

@article{vats2025guidelineseg,

author = {Vats, Vanshika and Rathee, Ashwani and Davis, James},

title = {Guideline-Consistent Segmentation via Multi-Agent Refinement},

journal = {AAAI Conference on Artificial Intelligence},

year = {2026},

}